Manufacturing Science & Technology

Transfer. Support. Optimize.

MSAT owns process knowledge across development, tech transfer, and manufacturing. Seal connects what other systems fragment.

The hardest part of MSAT work isn't analysis. It's aggregation.

Manufacturing Science and Technology is responsible for ensuring that a manufacturing process is scientifically defined, correctly implemented in cGMP operations, and remains in control throughout its lifecycle. MSAT owns the technical interpretation of process data—how development knowledge translates into GMP execution, how manufacturing issues are investigated, how process changes are justified to maintain product quality and supply. Unlike Process Development, Manufacturing Operations, or Quality, MSAT does not operate within a single system boundary. MSAT works across ELNs, MES, LIMS, QMS, historians, and statistical tools like JMP. The effectiveness of MSAT depends less on whether data exists, and more on whether it can be consistently interpreted, traced back to its origin, and reused without manual rework.

Different sites store data in different layouts. A CDMO with teams in the UK, US, and France will have three different folder structures, naming conventions, and file formats for the same type of data. Project managers use SharePoint for client visibility. Internal teams use network drives. Final analyses go straight into JMP. Some data lives in Excel, some in PDFs, some in email attachments that never made it into any system.

Early-phase projects—where R&D is still heavily involved—are the hardest. Parameters aren't settled, data sources are inconsistent, and every analysis requires hunting down context from development. Later-phase programs with 40+ batches are easier once you know which parameters to track. But getting there requires surviving the early chaos, and most organizations never build the foundation that would make later stages truly simple.

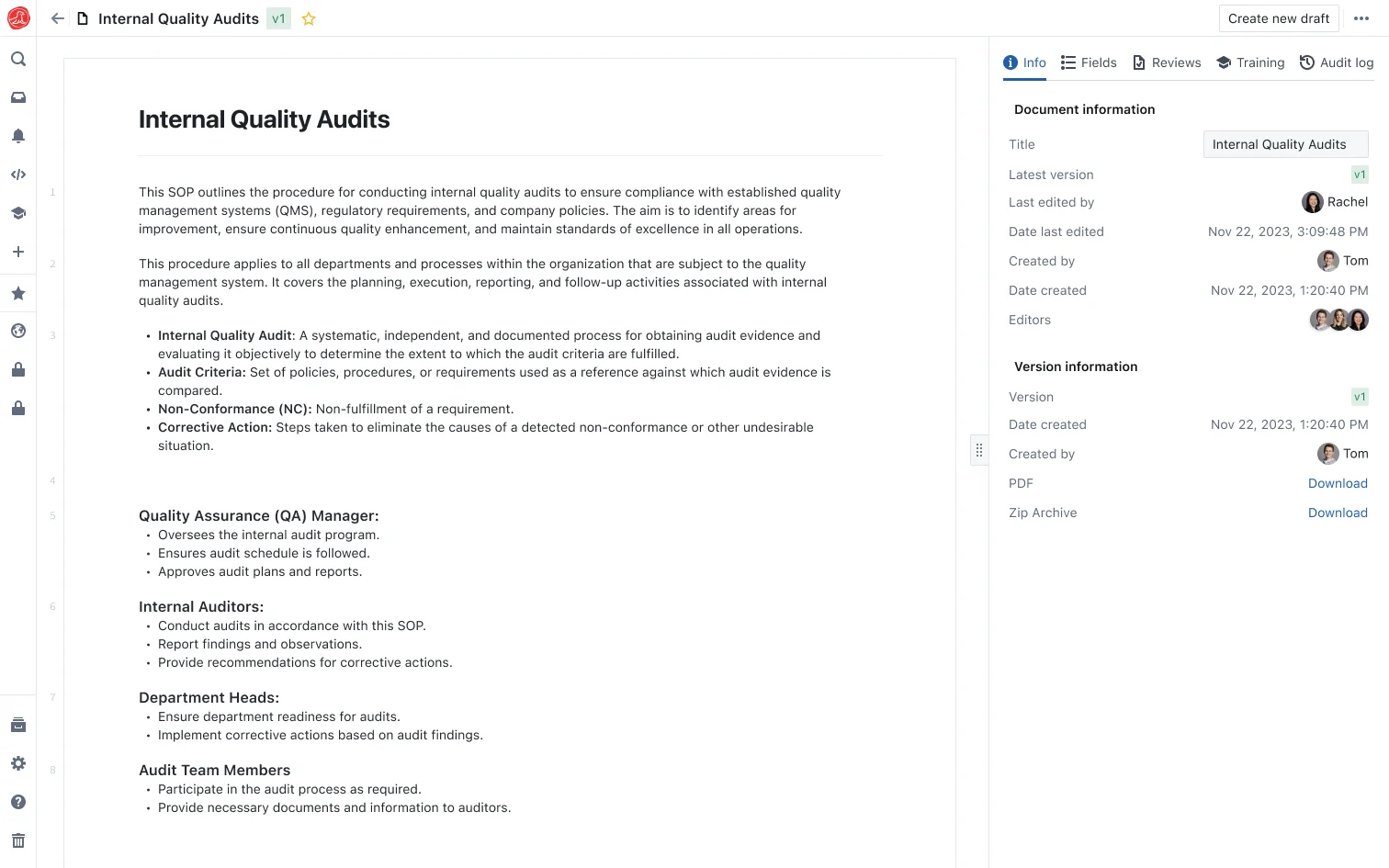

Tech transfer and the operational burden

MSAT involvement begins when a process is being defined or scaled in Process Development. Experimental data is captured in ELNs combining structured fields with free-text narrative. DOE results are shared as JMP files, Excel workbooks, or screenshots embedded in slide decks. Process descriptions, flow diagrams, and parameter justifications are maintained separately in PowerPoint or PDF reports. From an MSAT perspective, the most critical inputs are not the raw measurements themselves, but the decisions derived from them—why specific parameters were identified as CPPs, how operating ranges were established, what conditions were tested near the edge of failure. This information is typically embedded in narrative documents or discussed verbally and is not stored in a structured form that MSAT can later query. MSAT extracts values and conclusions from development artifacts into Excel trackers for tech transfer planning, and ambiguity is resolved through direct discussion rather than through a system.

Tech transfer is when MSAT becomes fully accountable for defining how the process will be executed in GMP. MSAT must combine development-defined parameters with site-specific equipment capabilities, facility constraints, risk assessments, and quality expectations into a process definition that can be implemented in master batch records. Each input arrives in a different format—development parameters in Excel summaries, equipment capabilities in engineering spreadsheets, facility constraints in SOPs, risk assessments in standalone templates. MSAT manually reconciles these to produce finalized GMP parameter sets, equipment equivalency justifications, and tech transfer protocols. Parameters are copied between files. Version control is managed through filenames. There is no system that maintains a single, authoritative process definition that carries forward into GMP execution—so parameters and assumptions are reviewed again for each transfer, and differences arise in how the same process is implemented across sites.

Once manufacturing begins, MSAT supports routine operations, deviation investigations, and process performance monitoring. Batch execution data is generated in MES, equipment behavior is recorded in historians, testing results are produced in LIMS, and deviations are captured in QMS. Although these systems are individually robust, they are not connected in a way that supports MSAT decision-making. Batch records do not reference development rationale. Deviation records do not include historical parameter trends. When a deviation occurs, MSAT exports batch data from MES, retrieves time-series data from the historian, pulls test results from LIMS, and aligns datasets manually in Excel before performing statistical analysis in JMP. Results are summarized and attached to investigation records rather than retained as reusable process knowledge. Similar issues are investigated repeatedly because prior analyses are not easily reused.
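To make the manual alignment step concrete, here is a minimal Python sketch of what it involves: assigning each historian reading to the batch step whose time window contains it, then summarizing per step. The function names, tuple layouts, and sample values are all hypothetical illustrations, not the export format of any particular MES or historian.

```python
from datetime import datetime
from statistics import mean


def align_readings_to_steps(steps, readings):
    """Group historian readings by the batch step whose window contains them.

    steps:    (step_name, start, end) tuples, e.g. from an MES export (assumed shape).
    readings: (timestamp, value) tuples, e.g. from a historian export (assumed shape).
    Windows are half-open: start <= timestamp < end.
    Readings outside every window are dropped.
    """
    aligned = {name: [] for name, _, _ in steps}
    for timestamp, value in readings:
        for name, start, end in steps:
            if start <= timestamp < end:
                aligned[name].append(value)
                break
    return aligned


def summarize(aligned):
    """Mean value per step, or None when a step has no readings."""
    return {name: (mean(vals) if vals else None) for name, vals in aligned.items()}


# Illustrative, made-up data for a single batch.
steps = [
    ("inoculation", datetime(2024, 1, 1, 8, 0), datetime(2024, 1, 1, 9, 0)),
    ("production", datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 12, 0)),
]
readings = [
    (datetime(2024, 1, 1, 8, 15), 37.0),
    (datetime(2024, 1, 1, 8, 45), 37.5),
    (datetime(2024, 1, 1, 9, 30), 37.0),
    (datetime(2024, 1, 1, 13, 0), 35.0),  # after the last step, so it is dropped
]

aligned = align_readings_to_steps(steps, readings)
summary = summarize(aligned)
```

Even in this toy form, the work is bookkeeping rather than analysis, which is why repeating it by hand in Excel for every investigation is costly.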

CDMOs meet with clients twice a week during active campaigns. PowerPoint updates take three hours—not because the analysis is complex, but because pulling the data together is manual. MSAT knows the data exists and what it shows, but can't present it in a form that builds client confidence without hours of manual aggregation. An MSAT engineer who's been on a project for years knows where everything is—which folder has the R&D batch data, who to ask about a parameter decision made eighteen months ago. A new hire doesn't have any of that. If you want to understand what happened on an R&D batch, you message three people and hope someone remembers. The institutional knowledge that makes experienced engineers effective isn't in any system—it's in their heads, and when they leave, it leaves with them.

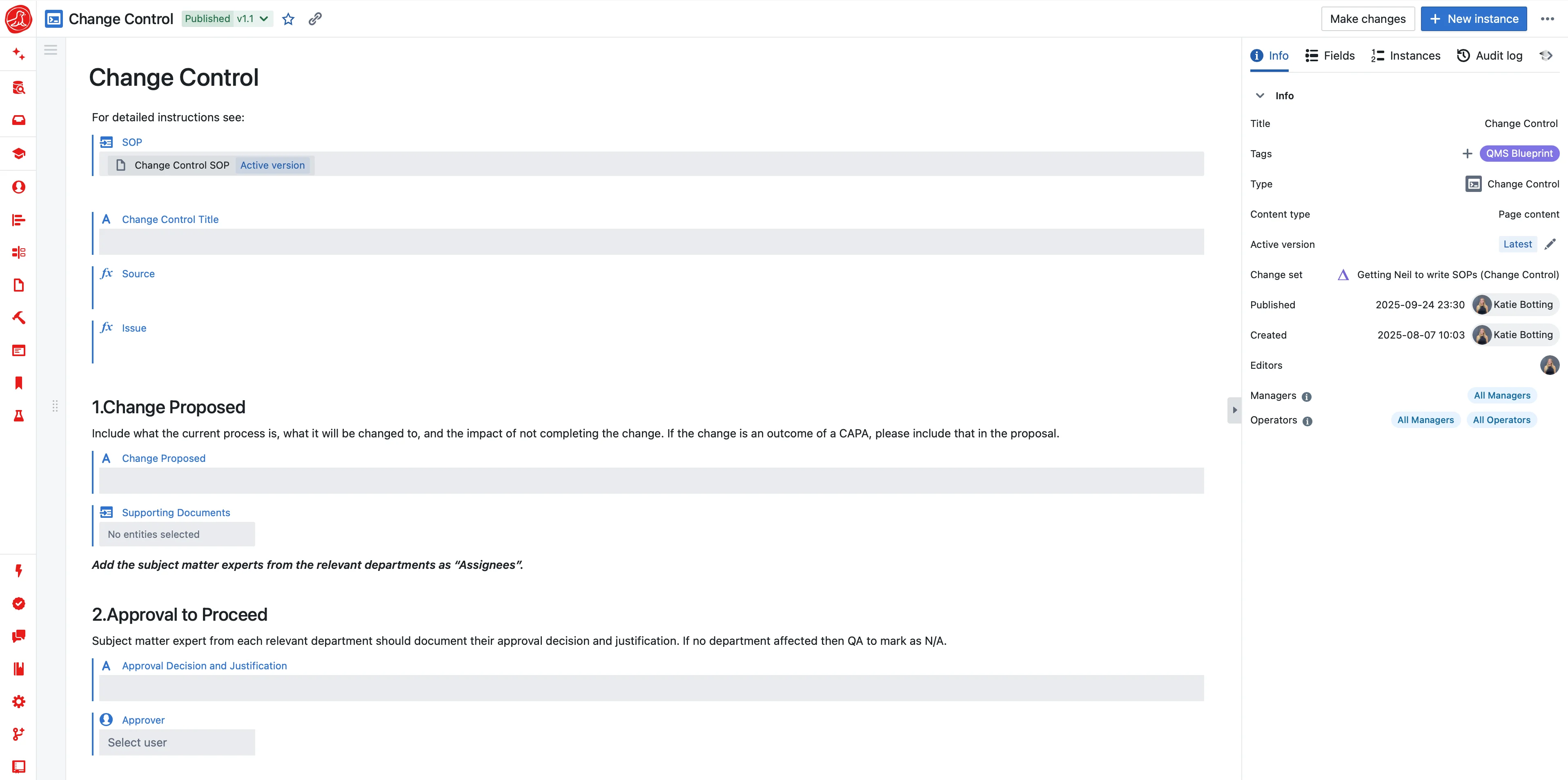

How Seal changes MSAT work

Today, processes are trapped in static documents. A Word file or PDF is a snapshot in time: a digital fossil that's difficult to reuse, impossible to compare, and a source of endless manual error during tech transfer. Seal changes this by treating your process not as a disposable document, but as a living, version-controlled product, a reusable digital asset. Move from monolithic templates to a flexible library of version-controlled building blocks: a unit operation, an analytical method, a material specification. These can be composed to create a Platform Process that defines the what and why, then configured for a specific context by binding site-specific parameters to create a Site-Specific Process ready for tech transfer. Tech transfer becomes a seamless promotion from development to validated GMP, with improvements systematically managed and propagated.

Multi-site organizations face a fundamental tension: global standards enable efficiency and regulatory consistency, but local sites have legitimate differences. Traditional approaches force uniformity, resulting in shadow systems and processes that exist on paper but not in practice. Seal takes a different approach: global building blocks define the what and why, local sites configure the how. Boston runs at 2000L on Bioreactor Model X, Dublin runs at 5000L on Bioreactor Model Y, Singapore runs at 1000L—all executing the same Platform Process with different Site-Specific Configurations. When the core process improves, the improvement propagates automatically. Sites own their execution while maintaining traceability to global process definitions.
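The split between a global Platform Process and Site-Specific Configurations can be sketched as a small data model. This is a hedged Python illustration only: the class names, field names, and the `configure_for_site` and `promote` helpers are assumptions made for the example, not Seal's actual data model or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnitOperation:
    """A version-controlled building block (hypothetical shape)."""
    name: str
    version: int
    rationale: str              # the "why"
    required_parameters: tuple  # parameter names each site must bind


@dataclass(frozen=True)
class PlatformProcess:
    """Global definition: the what and why, with no site detail."""
    name: str
    version: int
    unit_operations: tuple


@dataclass(frozen=True)
class SiteSpecificProcess:
    """A platform process bound to one site's parameters: the how."""
    site: str
    platform: PlatformProcess
    bindings: dict  # {unit operation name: {parameter name: value}}


def configure_for_site(platform, site, bindings):
    """Bind site parameters, rejecting incomplete configurations."""
    missing = [
        f"{op.name}.{param}"
        for op in platform.unit_operations
        for param in op.required_parameters
        if param not in bindings.get(op.name, {})
    ]
    if missing:
        raise ValueError(f"{site} is missing bindings: {missing}")
    return SiteSpecificProcess(site, platform, bindings)


def promote(site_process, new_platform):
    """Carry a site's bindings forward to a newer platform version."""
    return configure_for_site(new_platform, site_process.site, site_process.bindings)


culture = UnitOperation(
    "cell_culture", 1, "illustrative rationale text",
    ("working_volume_l", "bioreactor_model"),
)
platform_v1 = PlatformProcess("Platform Process", 1, (culture,))

boston = configure_for_site(platform_v1, "Boston", {
    "cell_culture": {"working_volume_l": 2000, "bioreactor_model": "Model X"},
})
dublin = configure_for_site(platform_v1, "Dublin", {
    "cell_culture": {"working_volume_l": 5000, "bioreactor_model": "Model Y"},
})
```

In this sketch a site that has not bound every required parameter is rejected rather than silently accepted. For example, a Singapore configuration that supplies only the 1000L volume would raise a `ValueError` until a bioreactor model is bound. Calling `promote` re-validates a site's existing bindings against a newer platform version, which is one simple way to model an improvement propagating while each site keeps its own configuration.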

Instead of asking MSAT to manually re-enter data from documents, AI automatically extracts structured information—but nothing enters the database without human review. The biggest barrier to automation isn't technology—it's trust. MSAT teams don't trust aggregation that happens in a black box. That's why Seal uses a changeset model: you see every proposed change, edit what's wrong, and approve what's right.

Drop files (PDFs, JMP files, Excel workbooks, PowerPoint decks) and AI extracts CPPs, ranges, rationale, and experimental context into structured records. Extracted data appears as a diff, just like a code review. You see exactly what will be added, modified, or linked. Edit if the AI got something wrong. Approve when it's correct. Only approved changesets enter the database, with a full audit trail of who reviewed what, when, and why.
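The review flow described above can be sketched in a few lines of Python. Everything here is a hypothetical illustration of a changeset pattern under simple assumptions (an in-memory dict stands in for the database, and the class names, method names, and sample values are invented for the example). It is not Seal's implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ProposedChange:
    record: str      # target record, e.g. a unit operation
    field_name: str  # attribute being set
    old: object      # current value in the database (None if new)
    new: object      # value proposed by extraction
    source: str      # document the value was extracted from


@dataclass
class Changeset:
    changes: list
    status: str = "pending"
    audit: list = field(default_factory=list)

    def diff(self):
        """Human-readable lines showing exactly what would change."""
        return [
            f"{c.record}.{c.field_name}: {c.old!r} -> {c.new!r} [{c.source}]"
            for c in self.changes
        ]

    def edit(self, index, new_value, reviewer, reason):
        """Correct a proposed value before approval."""
        if self.status != "pending":
            raise RuntimeError("changeset is no longer editable")
        self.changes[index].new = new_value
        self._log(reviewer, "edit", reason)

    def approve(self, reviewer, reason):
        self.status = "approved"
        self._log(reviewer, "approve", reason)

    def _log(self, reviewer, action, reason):
        # Audit entry: who reviewed what, when, and why.
        self.audit.append({
            "reviewer": reviewer,
            "action": action,
            "reason": reason,
            "at": datetime.now(timezone.utc),
        })


def apply_changeset(database, changeset):
    """Write changes to the database only if the changeset is approved."""
    if changeset.status != "approved":
        raise PermissionError("only approved changesets may enter the database")
    for c in changeset.changes:
        database.setdefault(c.record, {})[c.field_name] = c.new
    return database


# Illustrative values only; the range and file name are made up.
database = {}
changeset = Changeset([
    ProposedChange("cell_culture", "ph_range", None, "6.8-7.4", "dev_report.pdf"),
])
changeset.edit(0, "6.8-7.2", "reviewer_a", "extraction misread the upper limit")
changeset.approve("reviewer_a", "matches the source report")
apply_changeset(database, changeset)
```

The key design choice in this sketch is that `apply_changeset` is the only write path and it refuses anything not explicitly approved, so the audit log always explains how a value reached the database.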

Once published, process knowledge links to GMP execution. Batch records reference development rationale. Deviations pull historical context. Change assessments compile evidence automatically. Tech transfer doesn't require re-keying parameters from documents. Deviation investigations don't start with manual data assembly. CPV analyses connect to the assumptions they're verifying.

Seal can operate alongside existing ELN, MES, LIMS, and QMS systems or serve as an all-in-one platform. The value is in eliminating the manual transcription and reassembly that currently consumes MSAT time and introduces errors.

When MSAT is supported by a system aligned to its role, tech transfers shorten, investigations close faster, manufacturing stabilizes earlier, and change cycles accelerate. Traceability improves, inspection readiness increases, and confidence in technical decisions strengthens. These improvements come from preserving, connecting, and reusing process knowledge across the lifecycle. The result is reduced operational risk and a faster, more predictable time to market.

Capabilities