QC LIMS

Test. Pass. Release.

The batch waited three weeks. Manufacturing and testing took two days. QC paperwork took nineteen. Release testing that clears batches in days, not weeks.

The batch that waited three weeks.

Manufacturing finished Tuesday. The batch sat in quarantine while release testing took eleven days. Then an OOS result required investigation—another seven days. Three weeks for a batch that should have shipped in one. The science took two days. The paperwork took nineteen.

QC labs are bottlenecks because they're running modern analytical chemistry on 1990s information systems. Results get transcribed from instruments to notebooks to spreadsheets to LIMS—four copies of the same number, four chances for error. Specifications live in binders that nobody quite trusts. OOS investigations require data assembly from five different sources before analysis can even begin.

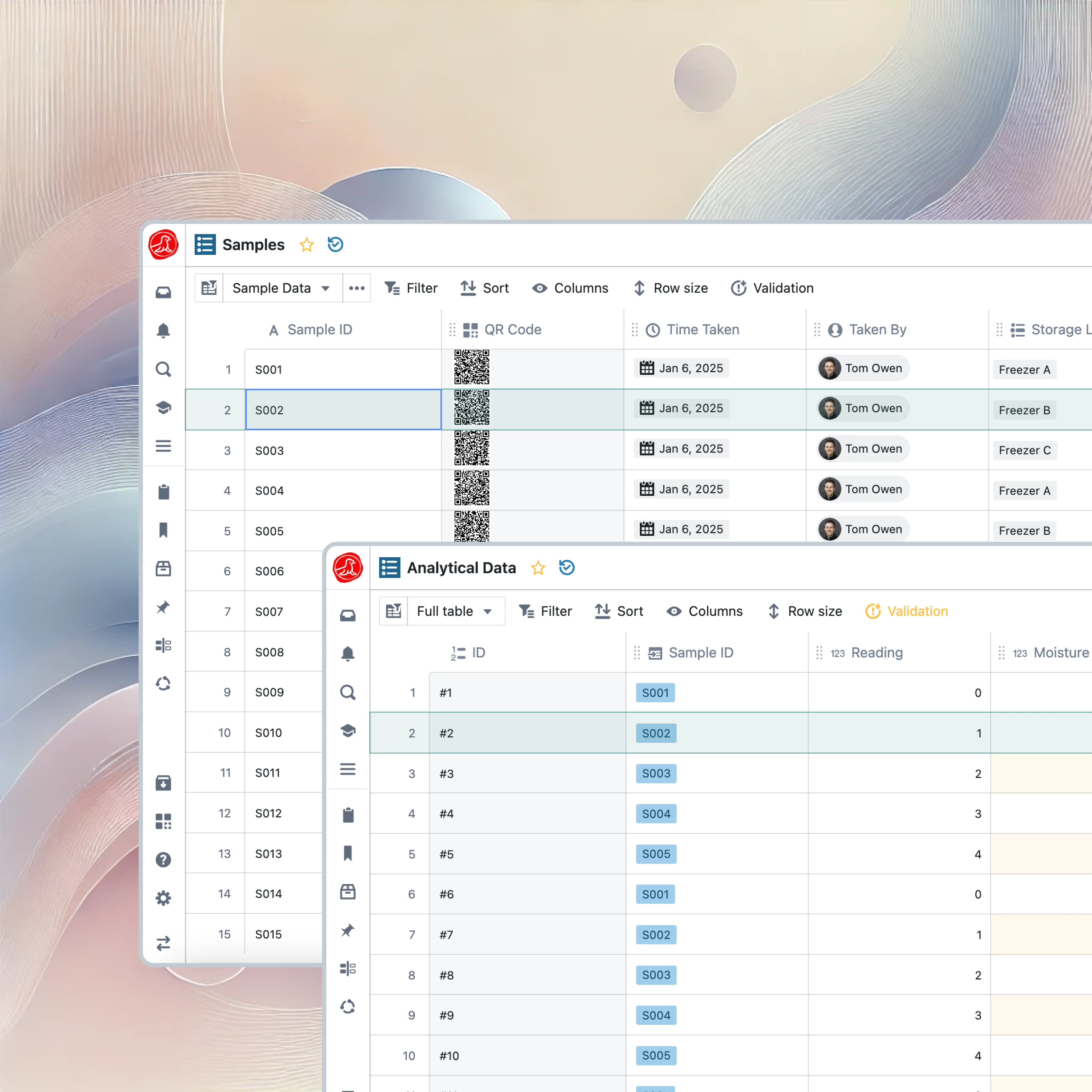

From sample to release

Every sample follows the same path: receive, test, check, review, release. The difference is how much friction exists at each transition.

When a sample arrives in Seal, specifications are already assigned based on the material and product. Testing is queued by manufacturing priority, so urgent batches move first. Results are checked against specs automatically the moment they enter the system. Reviewers see everything in one view: no compiling, no chasing. When release is approved, disposition flows downstream to MES and inventory without anyone copying data.
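The receive, test, check, review, release path with priority queueing can be sketched in a few lines of Python. This is a minimal illustration, not Seal's implementation: the `Sample`, `Stage`, and `TestQueue` names are hypothetical, and it assumes manufacturing priority is expressed as an integer where a lower number means more urgent.

```python
import heapq
import itertools
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    # The five stages named in the text, in order.
    RECEIVED = 1
    TESTED = 2
    CHECKED = 3
    REVIEWED = 4
    RELEASED = 5


@dataclass
class Sample:
    sample_id: str
    priority: int  # hypothetical: lower number = more urgent manufacturing need
    stage: Stage = Stage.RECEIVED

    def advance(self) -> None:
        # Move to the next stage; a sample cannot skip a stage or go past release.
        if self.stage is Stage.RELEASED:
            raise ValueError(f"{self.sample_id} is already released")
        self.stage = Stage(self.stage.value + 1)


class TestQueue:
    """Orders pending samples by manufacturing priority, first-come within a priority."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, Sample]] = []
        self._counter = itertools.count()  # tie-breaker preserves arrival order

    def push(self, sample: Sample) -> None:
        heapq.heappush(self._heap, (sample.priority, next(self._counter), sample))

    def pop(self) -> Sample:
        return heapq.heappop(self._heap)[2]
```

The counter in each heap entry means two samples with equal priority are never compared directly, so arrival order breaks ties.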

Specifications and automatic checking

"What's the potency spec for this product?" Should be a one-second answer. Instead it's a fifteen-minute hunt through registration files, validation protocols, master batch records, and last year's email from Regulatory Affairs when the limits changed.

Seal maintains specifications as structured data with version history. Potency for Product X at release: 95.0% to 105.0%. When specifications change, the system tracks which version applied to which batch, giving complete traceability without document archaeology. And the moment a result enters the system, it's checked automatically. The analyst finishes an HPLC run and potency comes back at 94.2%. That's below 95.0%, so the result is OOS and flagged instantly. The analyst can't miss it, and the reviewer can't approve it. The system enforces what policy requires.
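A minimal Python sketch of versioned specifications with an automatic check on entry. The `SpecVersion` record, the `spec_for` and `check` helpers, and the version 1 limits are hypothetical and exist only for illustration; only the 95.0% to 105.0% release limits and the 94.2% result come from the text.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SpecVersion:
    version: int
    effective: date  # first batch date this version applies to
    low: float       # inclusive lower limit (% label claim)
    high: float      # inclusive upper limit (% label claim)


# Hypothetical version history for "Product X potency at release".
# Version 1 limits are invented for illustration only.
POTENCY_SPEC_HISTORY = [
    SpecVersion(1, date(2022, 1, 1), 93.0, 107.0),
    SpecVersion(2, date(2024, 3, 1), 95.0, 105.0),
]


def spec_for(batch_date: date, history: list[SpecVersion]) -> SpecVersion:
    """Return the version in effect on batch_date, so each batch traces to one version."""
    applicable = [s for s in history if s.effective <= batch_date]
    if not applicable:
        raise LookupError(f"no specification in effect on {batch_date}")
    return max(applicable, key=lambda s: s.effective)


def check(result: float, spec: SpecVersion) -> str:
    """Classify a result the moment it is entered."""
    return "PASS" if spec.low <= result <= spec.high else "OOS"
```

Looking up the version by batch date, rather than always using the newest limits, is what lets an auditor see which limits a given batch was actually judged against.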

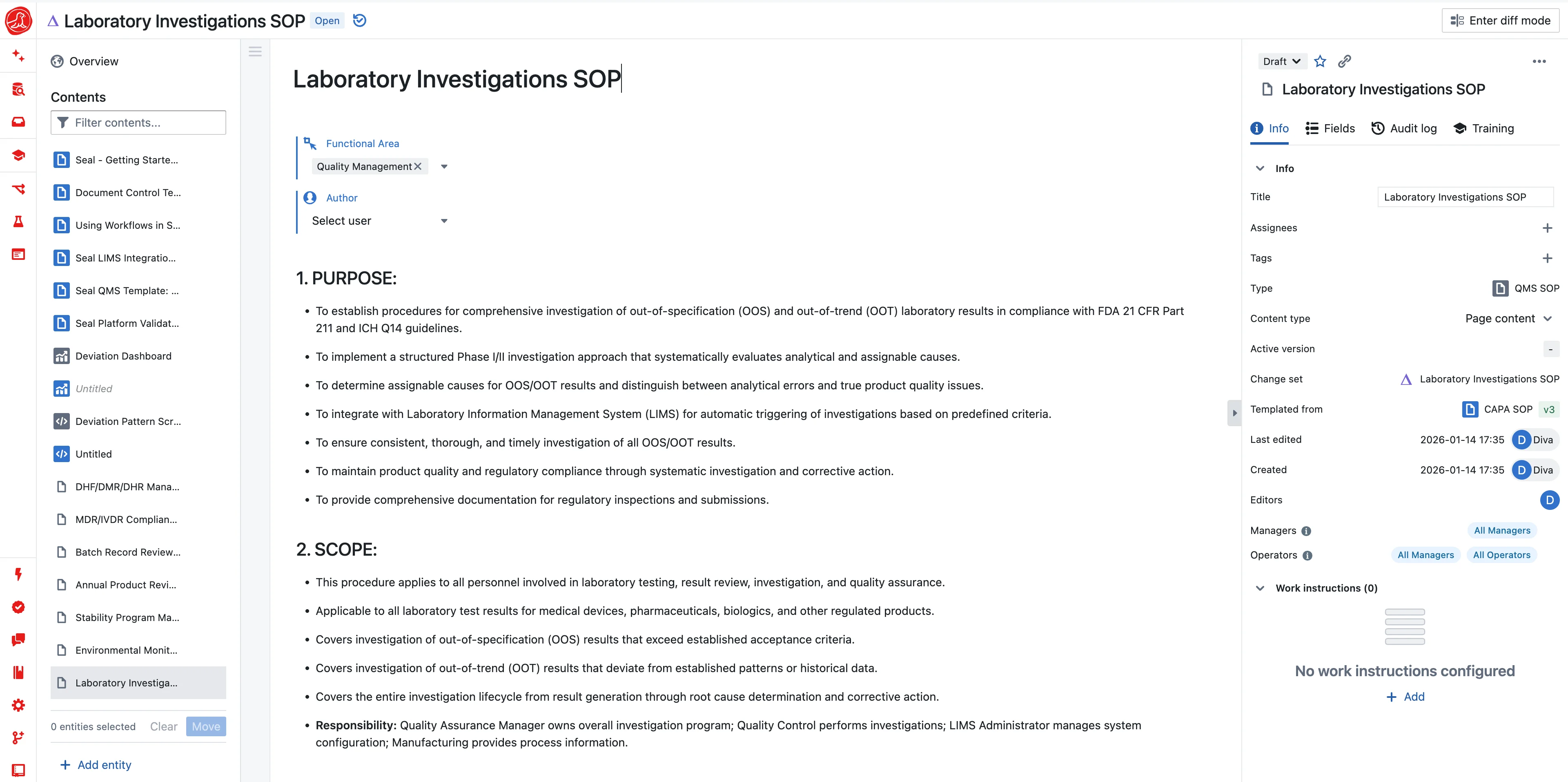

OOS investigation with context

An out-of-specification result triggers investigation. In most organizations, someone opens a blank form and spends hours gathering context: What instrument was used? What's its calibration status? What standards were run? What other samples were in the sequence? Was the analyst qualified?

Seal opens investigations with all that context already populated—sample, method, result, instrument calibration, standards used, analyst training. All linked. The investigator can start analyzing immediately instead of assembling documentation. Two-week investigations become two-day investigations, not by cutting corners but by eliminating the data-gathering phase entirely.

Direct instrument integration

The traditional path runs through four manual hand-offs: the HPLC generates data, the analyst prints it, transcribes it into a notebook, enters it into a spreadsheet, and copies it into LIMS. Four opportunities for error. No audit trail connecting the final number to its source.

The Seal path is direct: instrument generates data, data flows to LIMS. Zero transcription. Complete audit trail. The number in the batch record is the number from the instrument. Direct integrations exist for Agilent, Waters, Thermo Fisher, and other major vendors. For instruments without native integration, standard data formats and custom parsers achieve the same goal.

This isn't a research LIMS—it's built for executing validated methods and releasing batches. Method development belongs in the ELN, where scientists iterate freely. By the time a method reaches QC, it's validated: parameters fixed, acceptance criteria defined, procedure locked. When an analyst runs a release test, they execute a validated procedure with enforced parameters. The system doesn't allow deviation.

Beyond release testing

Raw materials arrive faster than QC can test them. Seal manages incoming inspection as a workflow rather than a pile: materials queue based on manufacturing need, supplier CoA values are compared against your results, and disposition flows to inventory the moment testing completes.
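One way to compare supplier CoA values with in-house results, sketched in Python under stated assumptions: the `compare_coa` helper and the per-test tolerances are hypothetical, and a real acceptance rule for CoA agreement would come from your own incoming-inspection procedure.

```python
def compare_coa(coa: dict[str, float], in_house: dict[str, float],
                tolerance: dict[str, float]) -> dict[str, str]:
    """Compare supplier CoA values with in-house results, test by test.

    Returns "AGREE", "DISAGREE", or "NOT TESTED" for each test on the CoA.
    The per-test tolerance is a hypothetical, site-defined allowance;
    tests without one must match exactly.
    """
    verdicts = {}
    for test, supplier_value in coa.items():
        if test not in in_house:
            verdicts[test] = "NOT TESTED"
        elif abs(supplier_value - in_house[test]) <= tolerance.get(test, 0.0):
            verdicts[test] = "AGREE"
        else:
            verdicts[test] = "DISAGREE"
    return verdicts
```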

Environmental monitoring works the same way. A single elevated viable count might be noise. Three over two weeks is a pattern. Most EM programs catch excursions—they tell you the limit was exceeded. Seal catches drift—it shows you the counts are increasing even while still within limits, so you can address problems before they become excursions, before they become investigations, before they become batch holds.
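Drift detection can be as simple as fitting a trend line to recent counts and flagging a sustained rise before any single count breaches the limit. The sketch below assumes a least-squares slope over a sliding window; the `em_status` function, the six-sample window, and the 0.5 CFU-per-sample threshold are hypothetical choices, not Seal's method.

```python
def slope(values: list[float]) -> float:
    """Least-squares slope of values against their index (0, 1, 2, ...)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def em_status(counts: list[float], action_limit: float,
              window: int = 6, min_slope: float = 0.5) -> str:
    """Classify recent viable counts for one EM location.

    EXCURSION: the latest count exceeds the action limit.
    DRIFT: still within limits, but the fitted trend over the last
           `window` samples rises by at least `min_slope` CFU per sample.
    `window` and `min_slope` are hypothetical tuning parameters.
    """
    if counts[-1] > action_limit:
        return "EXCURSION"
    recent = counts[-window:]
    # Require at least three points before fitting a trend.
    if len(recent) >= 3 and slope(recent) >= min_slope:
        return "DRIFT"
    return "OK"
```

Fitting over a window rather than comparing consecutive pairs keeps a single noisy count from raising an alert, while a steady climb still shows up.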

Stability that predicts

Stability studies run for years. Most labs manage them in spreadsheets—pull schedules in one file, results in another, trending done manually when someone remembers. At month 24, someone discovers a problem that was visible at month 6.

Seal manages the complete stability lifecycle: protocol definition, automated pull schedules, chamber condition monitoring, result entry with the same instrument integration as release testing. But the difference is predictive trending. As timepoints accumulate, the system calculates degradation rates and projects where you're heading. You see potential shelf life problems at month 6, not month 24 when reformulation means starting the clock over.
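Projecting where a stability study is heading amounts to fitting a degradation line to the timepoints so far and extrapolating to the specification limit. This Python sketch uses ordinary least squares and a point estimate; the function names are hypothetical, and the docstring notes how ICH Q1E's confidence-bound approach differs.

```python
from typing import Optional


def fit_line(t: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit y = intercept + slope * t."""
    n = len(t)
    t_mean, y_mean = sum(t) / n, sum(y) / n
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    sxy = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y))
    slope = sxy / sxx
    return y_mean - slope * t_mean, slope


def projected_crossing(months: list[float], assay: list[float],
                       lower_limit: float) -> Optional[float]:
    """Month at which the fitted assay line reaches lower_limit.

    Returns None if the fit shows no decline. This is a point estimate
    only; ICH Q1E estimates shelf life from the one-sided 95% confidence
    limit of the regression line, which gives an earlier, more
    conservative date.
    """
    intercept, slope = fit_line(months, assay)
    if slope >= 0:
        return None
    return (lower_limit - intercept) / slope
```

With illustrative data of 100.0%, 99.4%, and 98.8% at months 0, 3, and 6, the fitted loss is 0.2% per month and the line reaches a 95.0% limit at about month 25.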

From method development to QC

The method that works in R&D isn't automatically ready for QC. Development happens in the ELN with freedom to iterate—change column, adjust gradient, optimize parameters. But when a method moves to QC, it must be validated and locked.

Seal bridges this transition. The method developed in ELN becomes the basis for the QC method in LIMS. Same parameters, same calculations—but now with enforced specifications and required system suitability. When an analyst runs the method, they can't change critical parameters. The validation defines what's fixed; the system enforces it.
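Enforcing a locked method can be sketched as refusing any run request that changes a parameter fixed by validation. In this hypothetical Python example, `ValidatedMethod`, `start_run`, and the parameter names are illustrative assumptions; non-critical inputs such as the sample ID pass through, and the method version is stamped on every run.

```python
from dataclasses import dataclass
from types import MappingProxyType


@dataclass(frozen=True)
class ValidatedMethod:
    method_id: str
    version: int
    locked: MappingProxyType  # read-only view of parameters fixed by validation


def start_run(method: ValidatedMethod, requested: dict) -> dict:
    """Return run parameters, refusing any change to a locked parameter."""
    changed = {k: v for k, v in requested.items()
               if k in method.locked and method.locked[k] != v}
    if changed:
        raise PermissionError(
            f"{method.method_id} v{method.version}: locked parameters "
            f"cannot be changed: {sorted(changed)}")
    # Locked values, then run-specific inputs, then the version for traceability.
    return {**method.locked, **requested, "method_version": method.version}


# Hypothetical method and parameters, for illustration only.
hplc_potency = ValidatedMethod(
    "HPLC-POT-001", 3,
    MappingProxyType({"column": "C18", "flow_rate_ml_min": 1.0, "wavelength_nm": 254}),
)
```

Recording `method_version` on the run itself is what lets the "was this the validated method?" question be answered from the data rather than from memory.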

This isn't just convenience. When an OOS occurs and someone asks "was this the validated method?", the answer is structural. The method version is locked. The parameters match validation. The evidence is automatic.

Trending that warns

OOS means the limit was exceeded—investigation required. OOT means results are drifting while still within limits—attention warranted before investigation becomes mandatory.

Seal calculates control limits from your historical data. Results outside normal variation but inside specification get flagged. Three consecutive results trending in one direction trigger alerts. The system sees patterns across batches that individual analysts miss.
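Control limits and a run rule might look like this in Python. It is a sketch under assumptions: limits are the historical mean plus or minus three standard deviations, and a trend is `run_length` consecutive results moving strictly in one direction, defaulting to the three used in the text. Many programs use longer runs (Nelson's rule 3 uses six points) because three-point runs occur often by chance.

```python
from statistics import mean, stdev


def control_limits(history: list[float], k: float = 3.0) -> tuple[float, float]:
    """Lower and upper control limits: historical mean +/- k standard deviations."""
    m, s = mean(history), stdev(history)
    return m - k * s, m + k * s


def _monotonic(window: list[float]) -> bool:
    """True if every step in the window moves strictly up, or strictly down."""
    steps = list(zip(window, window[1:]))
    return all(b > a for a, b in steps) or all(b < a for a, b in steps)


def trend_flags(history: list[float], new: list[float], spec_low: float,
                spec_high: float, run_length: int = 3) -> list[str]:
    """Classify each new result as OOS, OOT, TREND, or OK, in that priority."""
    lcl, ucl = control_limits(history)  # limits come from history only
    series = list(history)
    flags = []
    for x in new:
        series.append(x)
        if not spec_low <= x <= spec_high:
            flags.append("OOS")    # specification failure: investigation required
        elif not lcl <= x <= ucl:
            flags.append("OOT")    # in spec, but outside normal variation
        elif len(series) >= run_length and _monotonic(series[-run_length:]):
            flags.append("TREND")  # consecutive results moving one way
        else:
            flags.append("OK")
    return flags
```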

A potency trending downward over six batches—still passing, but drifting—is a signal. Investigate now, when you have options. Not later, when you have a recall.

One platform

QC doesn't exist in isolation. Batches come from manufacturing, results affect disposition, and failures trigger quality events. Because Seal LIMS lives on the same platform as MES and QMS, these connections are native rather than integrated.

When a batch fails testing, MES batch status updates automatically. When an OOS triggers investigation, the deviation opens in QMS with full context attached. Certificate of Analysis generation works the same way: all data already lives in the system, so CoAs generate with one click.

When all tests pass, the batch should release immediately. Seal presents batch status in real time—all tests complete, within specification, instrument qualifications current, analyst training verified. Everything visible in one view. One review. One approval. Ship.
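The release view amounts to a checklist evaluated over every test on the batch. This Python sketch assumes a simple `TestRecord` shape with hypothetical field names; an empty list of blockers means the batch is ready for the single review and approval.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class TestRecord:
    name: str
    result: Optional[float]           # None = test not yet complete
    low: float                        # specification lower limit
    high: float                       # specification upper limit
    instrument_qualified_until: date  # hypothetical qualification expiry field
    analyst_trained: bool


def release_blockers(tests: list[TestRecord], today: date) -> list[str]:
    """Everything that stops release; an empty list means ready to approve."""
    blockers = []
    for t in tests:
        if t.result is None:
            blockers.append(f"{t.name}: not complete")
        elif not t.low <= t.result <= t.high:
            blockers.append(f"{t.name}: {t.result} outside {t.low}-{t.high}")
        if t.instrument_qualified_until < today:
            blockers.append(f"{t.name}: instrument qualification expired")
        if not t.analyst_trained:
            blockers.append(f"{t.name}: analyst training not verified")
    return blockers
```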

AI that builds your lab

Setting up a LIMS traditionally means weeks of configuration—defining specifications, creating test methods, configuring instrument integrations, building workflows. AI compresses this dramatically.

Drop your regulatory documents—CTD modules, approved specifications, validation reports. AI extracts specifications into structured data: test names, acceptance criteria, method parameters, sampling requirements. You review what was extracted, edit where needed, approve through change control. Weeks of manual data entry become hours of review.

Method setup works the same way. "Create a release testing method for potency by HPLC with system suitability, standard curve, and sample analysis." AI generates the method structure with appropriate parameters, calculations, and acceptance criteria based on your validation report. Your analysts review and refine rather than building from scratch.

Instrument integrations configure conversationally. "Connect to our Waters Alliance HPLC and parse potency results from the summary report." AI generates the integration configuration and data parsing rules. Test with sample data, verify the mapping, deploy.
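A custom parser is mostly a mapping from an export's columns to result records. The sketch below assumes a hypothetical CSV summary report with `SampleName`, `Component`, and `Amount` columns; it is not the format of any specific Waters, Agilent, or Thermo Fisher export, and a real layout must be checked against sample data before deployment.

```python
import csv
import io


def parse_summary_report(text: str, component: str = "Potency") -> dict[str, float]:
    """Map sample name -> reported amount for one component.

    Assumes a hypothetical CSV export with the columns SampleName,
    Component, Amount. Real export layouts vary by instrument and data
    system, so each connection needs its own verified mapping.
    """
    results = {}
    for row in csv.DictReader(io.StringIO(text)):
        if row["Component"].strip() == component:
            results[row["SampleName"].strip()] = float(row["Amount"])
    return results
```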

Every AI proposal is transparent and reviewable. Specification changes go through your approval process. Method configurations require validation. AI accelerates setup; your quality team controls the system.

And AI works throughout operations. When samples run, AI flags anomalies against historical patterns before they become a formal OOS: "This result is within spec but 2 standard deviations below your last 50 runs." When an OOS is investigated, AI suggests likely causes based on similar investigations: "73% of OOS on this method traced to mobile phase preparation." When stability data is trended, AI detects drift across timepoints and predicts shelf life implications.

The lab that took months to configure is operational in weeks. The investigations that took weeks complete in days. The batches that waited three weeks ship in three days.

Getting Started

You've been promised "start small" before. Then the vendor wanted to configure everything. Then validation took six months. Then the instrument integrations didn't work with your specific Agilent setup. Then you were running two systems with no end in sight.

This works differently.

Pick one workflow. The release test you run most often, or the incoming inspection backlog that makes manufacturing wait. Configure specifications and methods for that workflow—AI extracts from your existing documents, you review. Connect one instrument—we have standard integrations for major vendors, and we build custom parsers when needed. Run samples through the system next to your legacy process.

The first batch takes a day to configure and a week to validate. By week three, you're releasing batches through Seal. Your legacy LIMS stays running for everything else. No big bang. No parallel operation hell. Just one workflow that works better.

From there, expand. Add the next workflow. Connect the next instrument. Each step is incremental. Each step delivers value before the next starts. Analysts learn through real work, not training sessions they'll forget.

The lab that took three weeks to release a batch? You won't get there overnight. But you'll get there faster than you think—because you're not waiting for a perfect system. You're building one, workflow by workflow.

Capabilities